IW-D

2.1 GROWING DEPENDENCY, GROWING RISK

The objective of warfare waged against agriculturally-based societies was to gain control over their principal source of wealth: land. Military campaigns were organized to destroy the capacity of an enemy to defend an area of land.

The objective of warfare waged against industrially-based societies was to gain control over their principal source of all wealth: the means of production. Military campaigns were organized to destroy the capacity of the enemy to retain control over sources of raw materials, labor and production capacity.

The objective of warfare to be waged against information-based societies is to gain control over the principal means for the sustenance of all wealth: the capacity for coordination of socio-economic inter-dependencies. Military campaigns will be organized to cripple the capacity of an information-based society to carry out its information-dependent enterprises.

In U.S. society, over 60 percent of the workforce is engaged in information-related management activities. The value of most wealth-producing resources depends on "knowledge capital" and not on financial assets or masses of labor. Similarly, the doctrine of the U.S. military is now principally based on the superior use of information.

"The joint campaign should fully exploit the information differential, that is, the superior access to and ability to effectively employ information on the strategic, operational and tactical situation which advanced U.S. technologies provide our forces." [Joint Pub. 1, p. IV-9]

The military doctrines shaping U.S. force structure and operational planning assume this information superiority. "Joint Vision 2010 focuses the strengths of each individual Service on operational concepts that achieve Full Spectrum Dominance." This technological view is shared in the Army's "Enterprise Strategy" and "Force XXI Concept of Operations," the Navy's "Forward ... From the Sea," the Air Force's "Global Presence," and the Marine Corps' "Operational Maneuver from the Sea."

The capstone Joint Vision 2010 provides the conceptual template for how America's Armed Forces will channel the vitality and innovation of our people and leverage technological opportunities to achieve new levels of effectiveness in joint warfighting. It addresses the expected continuities and changes in the strategic environment, including technology trends and their implications for our Armed Forces. It recognizes the crucial importance of our current high-quality, highly trained forces and provides the basis for their further enhancement by prescribing how we will fight in the early 21st century. This vision of future warfighting embodies the improved intelligence and command and control available in the information age and goes on to develop four operational concepts: dominant maneuver, precision engagement, full dimensional protection, and focused logistics.

It is not prudent to expect the U.S. dependence on information-dominated activities for wealth production and for national security to go unchallenged. In his book, Strategy: The Logic of War and Peace [1987, Belknap Press, pages 27-28], Edward Luttwak notes:

The notion of an 'action-reaction' sequence in the development of new war equipment and newer countermeasures, which induce in turn the development of counter-countermeasures and still newer equipment, is deceptively familiar. That the technical devices of war will be opposed whenever possible by other devices designed specifically against them is obvious enough. Slightly less obvious is the relationship (inevitably paradoxical) between the very success of new devices and their eventual failure: any sensible enemy will focus his most urgent efforts on countermeasures meant to neutralize whatever opposing device seems most dangerous at the time.

The reality is that the vulnerability of the Department of Defense -- and of the nation -- to offensive information warfare attack is largely a self-created problem. Program by program, economic sector by economic sector, we have based critical functions on inadequately protected telecomputing services. In aggregate, we have created a target-rich environment and the U.S. industry has sold globally much of the generic technology that can be used to strike these targets.

Despite the enormous cumulative risk to the nation's defense posture, at the individual program level there still is inadequate understanding of the threat or acceptance of responsibility for the consequences of attacks on individual systems that have the potential to cascade throughout the larger enterprise.

A case examined in some detail by the Task Force was the dependence of the Global Transportation Network (GTN) on unclassified data sources and the GTN interface to the Global Command and Control System (GCCS). GCCS will continue to increase in importance as it becomes the system of systems through which CINCs, JTFs, and other commanders gain access to more and different information sources. Although GCCS has undergone selected security testing, much remains to be accomplished. For example, security testing to date has focused principally upon Oracle databases and applications evaluation. Other GCCS aspects need thorough security testing, e.g., database applications (Sybase), message functions, and configuration management. GTN and GCCS are not unique circumstances. The Global Combat Support System and a long series of Advanced Concept Technology Demonstrations currently shaping the future of C4ISR follow a remarkably similar pattern: well-intentioned program managers work very hard to deliver an improved mission capability in a constrained budget environment. The operators they are supporting do not emphasize security, and neither operators nor developers are held responsible for the contribution their individual program makes to the collective risk of cascading failure in the event of information warfare attack.

To reduce the danger, all defense investments must be examined from a network- and infrastructure-oriented perspective, recognizing the collective risk that can grow from individual decisions on systems that will be connected to a shared infrastructure. Only those programs that can operate without connecting to the global network or those that can operate with an accepted level of risk in a networked information warfare environment should be built. Otherwise, we are paying for the means that an enemy can use to attack and defeat us.

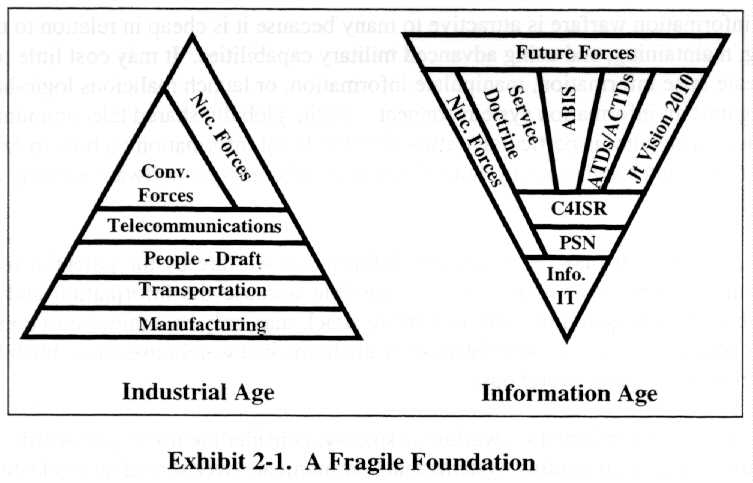

The shift from the industrial age to the information age and the implications are illustrated in Exhibit 2-1.

The United States formerly enjoyed a broad-based manufacturing foundation to support other infrastructures and conventional and nuclear forces. With the increasing dependence on information and information technology, that broad-based foundation has been reduced to a rather narrow base of constantly changing and increasingly vulnerable information and information technology. Service and joint doctrine clearly indicate an increasing dependence of future forces on information and information technology. However, the doctrine of information superiority assumes the availability of the information and information technology -- a dangerous assumption. The published Service and joint doctrine does not address the operational implications of a failure of information and information technology.

By analogy, consider the protection implications of adding an aircraft carrier to our force structure. The carrier does not deploy in isolation. It is accompanied by all manner of ships, aircraft, and technology to ensure the protection of the entire battle group: destroyers for picket duty, cruisers for firepower, submarines for subsurface protection, aircraft and radar for early warning, and so on. The United States must begin to consider the implications of protecting its information-age doctrine, tactics, and weapon systems. It cannot simply postulate doctrine and tactics which rely so extensively on information and information technology without comparable attention to information and information systems protection and assurance. This attention, backed up with sufficient resources, is the only way the Department can ensure adequate protection of our forces in the face of the inevitable information war.

2.2 INFORMATION WARFARE

Although this Task Force specifically examined IW-D, it also considered a few of the concepts behind offensive information warfare to help define the battlefield upon which the defense must operate.

Offensive information warfare is attractive to many because it is cheap in relation to the cost of developing, maintaining, and using advanced military capabilities. It may cost little to suborn an insider, create false information, manipulate information, or launch malicious logic-based weapons against an information system connected to the globally shared telecommunications infrastructure. The latter is particularly attractive; the latest information on how to exploit many of the design attributes and security flaws of commercial computer software is freely available on the Internet.

In addition, the attacker may be attracted to information warfare by the potential for large nonlinear outputs from modest inputs. This is possible because the information and information systems subject to offensive information warfare attack may only be a minor cost component of a function or activity of interest -- the database of the items in a warehouse costs much less than the physical items stored in the warehouse.

As an example of why information warfare is so easy, consider the use of passwords. We have migrated to distributed computing systems that communicate over shared networks but largely still depend on the use of fixed passwords as the first line of defense -- a carry-over from the days of the stand-alone mainframe computer. We do this even though we know that network analyzers have been and continue to be used by intruders to steal computer addresses, user identities, and user passwords from all the major Internet and unclassified military networks. Intruders then use these stolen identities and passwords to masquerade as legitimate users and enter into systems. Once in, they apply freely available software tools which ensure that they can take control of the computer and erase all traces of their entry.
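The replay weakness of fixed passwords described above can be illustrated with a minimal sketch. It is not drawn from any DoD system: the shared secret, the class name `ChallengeResponseServer`, and the helper `client_response` are all hypothetical, and HMAC-SHA256 challenge-response is only one of several possible alternatives to a fixed password. The point is narrow: a captured fixed password can be reused indefinitely, while a captured one-time response cannot.

```python
import hashlib
import hmac
import secrets

# Hypothetical shared secret. In the fixed-password scheme this exact value
# crosses the network on every login and can be captured by a network analyzer.
SECRET = b"correct horse battery staple"


def fixed_password_login(sent: bytes) -> bool:
    """Fixed-password check: whatever matches the stored value is accepted."""
    return hmac.compare_digest(sent, SECRET)


class ChallengeResponseServer:
    """Issues a fresh random challenge for each login attempt (illustrative)."""

    def __init__(self, secret: bytes) -> None:
        self._secret = secret
        self._pending = set()

    def new_challenge(self) -> bytes:
        challenge = secrets.token_bytes(16)
        self._pending.add(challenge)
        return challenge

    def verify(self, challenge: bytes, response: bytes) -> bool:
        # Each challenge is valid once, so a recorded exchange cannot be replayed.
        if challenge not in self._pending:
            return False
        self._pending.discard(challenge)
        expected = hmac.new(self._secret, challenge, hashlib.sha256).digest()
        return hmac.compare_digest(response, expected)


def client_response(secret: bytes, challenge: bytes) -> bytes:
    """Client proves knowledge of the secret without transmitting it."""
    return hmac.new(secret, challenge, hashlib.sha256).digest()


# An intruder who sniffed one fixed-password login can masquerade at will.
sniffed_password = SECRET
replay_fixed_ok = fixed_password_login(sniffed_password)

# An intruder who sniffed one challenge-response exchange gains nothing reusable.
server = ChallengeResponseServer(SECRET)
c1 = server.new_challenge()
r1 = client_response(SECRET, c1)
first_login_ok = server.verify(c1, r1)            # legitimate login
replay_same_challenge_ok = server.verify(c1, r1)  # replay of the same exchange
c2 = server.new_challenge()
replay_on_new_challenge_ok = server.verify(c2, r1)  # old response, new challenge
```

The sketch addresses only password capture on the wire. It does nothing about an intruder who already controls the host, which is the second half of the problem the paragraph above describes.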

It is important to stress that strategically important information warfare is not a trivial exercise of hacking into a few computers -- the Task Force does not accept the assertions of the popular press that a few individuals can easily bring the United States to its knees. The Task Force agrees that it is easy for skilled individuals (or less skilled people with suitable automated tools) to break into unprotected and poorly configured networked computers and to steal files, install malicious software, or cause a denial of service. However, it is very much more difficult to collect the intelligence needed and to analyze the designs of complex systems so that an attacker could mount an attack that would cause nation-disrupting or war-ending damage at the time and place and for the duration of the attacker's choosing.

This is not to make light of the power of the common hacker "attack" methods reported in the press. Many of these methods are sufficiently robust to enable significant harassment or large-scale terrorist attacks. The Task Force also acknowledges that malicious software can be emplaced over time with a common time trigger or other means of activation and that the effect could be of the scale of a major concurrent attack. While such an attack cannot be ruled out, the probability of such is assessed to be low. Currently, however, there is no organized effort to monitor for unauthorized changes in operational software even though for the past 3 years unknown intruders have routinely been penetrating DoD's unclassified computers.
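One simple form that monitoring for unauthorized changes in operational software could take is a comparison of file digests against a recorded baseline. The following is a minimal sketch under stated assumptions: the function names `take_baseline` and `compare_to_baseline` and the file names are hypothetical, SHA-256 is an assumed choice of digest, and a temporary directory stands in for an installed software tree.

```python
import hashlib
import tempfile
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def take_baseline(root: Path) -> dict:
    """Record a digest for every regular file under root."""
    return {
        str(p.relative_to(root)): file_digest(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def compare_to_baseline(root: Path, baseline: dict) -> dict:
    """Report files modified, added, or removed since the baseline was taken."""
    current = take_baseline(root)
    return {
        "modified": sorted(
            k for k in baseline if k in current and current[k] != baseline[k]
        ),
        "added": sorted(k for k in current if k not in baseline),
        "removed": sorted(k for k in baseline if k not in current),
    }


# Demonstration on a throwaway directory standing in for operational software.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "login.bin").write_bytes(b"original program")
    (root / "mailer.bin").write_bytes(b"mail handler")
    baseline = take_baseline(root)

    (root / "login.bin").write_bytes(b"original program + backdoor")  # tampering
    (root / "dropper.bin").write_bytes(b"intruder tool")              # new file
    (root / "mailer.bin").unlink()                                    # deletion

    report = compare_to_baseline(root, baseline)
```

Such a check is only as trustworthy as the baseline itself. An intruder who controls the machine can rewrite a baseline stored on it, so in practice the baseline would need to be kept where the monitored system cannot modify it.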

The above assessments do not mean that the threat of offensive information warfare is low or that it can be ignored. The U.S. susceptibility to hostile offensive information warfare is real and will continue to increase until many current practices are abandoned.

Practices that invite attack include poorly designed software applications; the use of overly complex and inherently unsecure computer operating systems; the lack of training and tools for monitoring and managing the telecomputing environment; the promiscuous inter-networking of computers creating the potential for proliferating failure modes; the inadequate training of information workers; and the lack of robust processes for the identification of system components, including users. By far the most significant is the practice of basing important military, economic and social functions on poorly designed and configured information systems, and staffing these systems with skill-deficient personnel. These personnel often pay little attention to or have no understanding of the operational consequences of information system failure, loss of data integrity, or loss of data confidentiality.

Information warfare defense is not cheap, nor can it be easily obtained. It will take resources to develop the tools, processes, and procedures needed to ensure the availability of information and integrity of information, and to protect the confidentiality of information where needed. Additional resources will be needed to develop design guidelines for system and software engineers to ensure information systems that can operate in an information warfare environment. More resources will be needed to develop robust means to detect when insiders or intruders with malicious intent have tampered with our systems and to have a capability to undertake corrective actions and restore the systems.

Note that the appropriate investment in an information warfare defense capability has no correlation with the investment that may have been made to obtain an offensive information warfare capability. Information warfare defense encompasses the planning and execution of activities to blunt the effects of an offensive information warfare attack. However, the value of an investment in information warfare defense is not a function of the cost of the information or information system to be protected. Rather, the value of the defense is a function of the value to the defender of an information-based activity or process that may be subject to an information warfare attack.

If the defender leaves unprotected vital social, economic, and defense functions that depend upon information services, then the defender invites potential adversaries to make an investment in an offensive information warfare capability to attack these functions. To provide a robust deterrent against such an attack, an information-dependent defender should invest wisely in a capability to protect and restore vital functions and processes and demonstrate that the information services used are robust and resilient to attack.

Part of the challenge is that the rate of technology change is such that most system designers and system engineers have their hands full just trying to keep up -- never mind learning and applying totally new security design practices. But the lack of such steps can be costly. The organized criminals that recently made a successful run at one of the major U.S. banks spent 18 months in preparation, including downloading application software and the e-mail of the software designers, before they started to transfer funds electronically.

It will cost even more, as well as raise significant issues of privacy and the role of the government, to design a warning system for major institutions of society such as the banks or air traffic control. Such a warning system should, as a minimum, provide tactical warning of and help in the characterization of attacks mounted through the information infrastructure.

Probably the biggest obstacle will be the difficulty in convincing people -- whether in commerce, in the military, or in government -- of the need to examine work functions and operating processes. This examination should uncover unintentional dependencies on the assumed proper operation of information services beyond their control.

2.3 THE INFRASTRUCTURE

What is the National Information Infrastructure (NII)? The phrase "information infrastructure" has an expansive meaning. The NII includes more than just the physical facilities used to transmit, store, process, and display voice, data, and images. It encompasses a wide and ever-expanding range of equipment: cameras, scanners, keyboards, telephones, fax machines, computers, switches, compact disks, video and audio tape, cable, wire, satellites, optical fiber transmission lines, microwave nets, televisions, monitors, printers, and much more.

The NII is not a cliff that suddenly confronts us, but rather a slope -- one that society has been climbing since postal services and semaphore networks were established. An information infrastructure has existed for a long time, continuously evolving with each new advance in communications technology. What is different is that today we are imagining a future when all the independent infrastructures are combined. An advanced information infrastructure will integrate and interconnect these physical components in a technologically neutral manner so that no one industry will be favored over any other. Most importantly, the NII requires building foundations for living in the Information Age and for making these technological advances useful to the public, business, libraries, and other nongovernmental entities. That is why, beyond the physical components of the infrastructure, the value of the NII to users and the nation will depend in large part on the quality of its other elements:

We call out domains within this infrastructure by names that reflect the interest of the user: the Defense Information Infrastructure of the defense community; the National Information Infrastructure of the United States; the complex, interconnected Global Information Infrastructure of the future described so well to the Task Force by the representatives of the Central Intelligence Agency. The reality is that almost all are interconnected.

DoD has over 2.1 million computers, over 10,000 LANs, and over 100 long-distance networks. DoD depends upon computers to coordinate and implement aspects of every element of its mission, from designing weapon systems to tracking logistics. In field testing, DISA has determined that at least 65 percent of DoD unclassified systems are vulnerable to attack. Consider how this state came about.

The early generations of computer systems presented relatively simple security challenges. They were expensive; they were isolated in environmentally controlled facilities; and few understood how to use them. Protecting these systems was largely a matter of physical security -- controlling access to the computer room -- and of clearing the small number of specialists who needed such access.

As the size and price of computers were reduced, microprocessors began to appear in every workplace, on the battlefield and embedded in weapons systems. Software for these computers is written by individuals and firms scattered across the globe. Connectivity was extended, first to remote terminals, eventually to local- and wide-area communications networks, and now to global coverage. What was once a collection of separate systems is now best understood as a dynamic, ever-changing, collection of subscribers using a large, multifaceted information infrastructure operating as a virtual utility.

These legacy computer systems were not designed to withstand second-, third-, or "n"-order-level effects of an offensive information warfare attack. Nor is there evidence that the computer systems presently under development will provide such protection. The cost for "totally hardened" systems is prohibitive. Security criteria at present presume that computing can be protected at its perimeter, primarily through the encryption of telecommunications links. However, internal security may be more important than perimeter defense.

It is not necessary to break the cryptographic protection used to protect telecommunications and data to attack classified computing environments. The legacy protection paradigm used by DoD was based upon the classification of information. However, most classified computer systems contain, and often rely on, unclassified information. This unclassified information often has little or no protection of the data integrity prior to entry into classified systems. The expected interaction between GCCS and GTN is an example of this. An increasing number of DoD systems contain decision aids and other event driven modules that, unless buffered from unclassified data whose integrity cannot be verified, are at risk.
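The idea of buffering decision aids from unclassified data whose integrity cannot be verified can be sketched as a guard that checks an integrity tag on each record before passing it on. This is an illustration only and does not describe the GCCS or GTN interfaces: the key `FEED_KEY`, the record fields, and the functions `sign_record` and `guard` are hypothetical, and HMAC-SHA256 is an assumed choice of integrity mechanism.

```python
import hashlib
import hmac
import json

# Hypothetical key shared between the unclassified source and the guard.
FEED_KEY = b"example-feed-key"


def sign_record(record: dict, key: bytes) -> dict:
    """Source side: attach an HMAC-SHA256 tag over a canonical JSON encoding."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"record": record, "tag": tag}


def guard(messages: list, key: bytes):
    """Guard side: pass verified records on and quarantine everything else."""
    accepted, quarantined = [], []
    for msg in messages:
        payload = json.dumps(msg["record"], sort_keys=True).encode("utf-8")
        expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
        if hmac.compare_digest(expected, msg["tag"]):
            accepted.append(msg["record"])
        else:
            quarantined.append(msg["record"])
    return accepted, quarantined


good = sign_record({"shipment": "A-17", "pallets": 40}, FEED_KEY)
tampered = sign_record({"shipment": "B-02", "pallets": 12}, FEED_KEY)
tampered["record"]["pallets"] = 1200  # altered in transit after signing

accepted, quarantined = guard([good, tampered], FEED_KEY)
```

A tag of this kind shows only that a record was not altered after the source signed it. It says nothing about whether the source itself was accurate or uncompromised, so it narrows the exposure described above without removing it.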

To cope with this new reality, the approach for managing information security must shift from developing security for each individual system and network to developing security for subscribers within the worldwide utility; and from protecting isolated systems owned by discrete users to protecting distributed, shared systems that are interconnected and depend upon an infrastructure that individual subscribers neither own nor control.

Successful protection policies within this global structure must be sufficiently flexible to cover a wide range of systems and equipment from local area networks to worldwide networks, and from laptop computers to massively parallel processing supercomputers. They must take into account threat, both from the insider and the outsider, and must espouse a philosophy of risk management in making security decisions.

These protection challenges are made more difficult by the rapid technological and regulatory changes under way in the distributed computing environment. The Telecommunications Act of 1996 is reshaping all aspects of interconnected communications in the United States. Similar movements toward deregulation are under way across the globe. Into this regulatory turmoil technology is introducing new services based on a bevy of competing waveforms and protocols for use over copper, coaxial, glass, and wireless mediums. To date, it is not possible to predict how fragile or how robust the communications infrastructure will be in the near term -- let alone the far future.

New computing technologies are being integrated into distributed computing environments on a large scale even though the fragility of these technologies is not understood. Recent examples include the post-deployment security flaws found in Netscape Navigator and in Java applets; the ongoing market struggle between the Object Management Group's Common Object Request Broker Architecture and Microsoft Corporation's Distributed Component Object Model (each with a different approach to security) to dominate the building blocks for World Wide Web applications formed from collections of objects distributed across clients and servers; and a proposed future where Microsoft would automatically deliver and install software updates onto the customer's desktop without the customer's active involvement.

These environmental factors have serious implications for information warfare defense. Within this rapidly changing, globally interconnected environment of telecomputing activities it is not possible for a person to identify positively who is interconnected with him or her or to know the exact path that message and voice traffic takes as it transits the telecommunications "cloud." It is not possible to know technically or at the logical level how the various software components on a computer -- including the distributed applets downloaded, used, and discarded -- interact. It is not possible to know for sure if the various components installed in the computer hardware only do what is asked of them. Finally, it is certainly not possible to know for certain if a co-worker who shares authorized access to a telecomputing environment is behaving appropriately.

In sum, we have built our economy and our military on a technology foundation that we do not control and which, at least at the fine detail level, we do not understand.

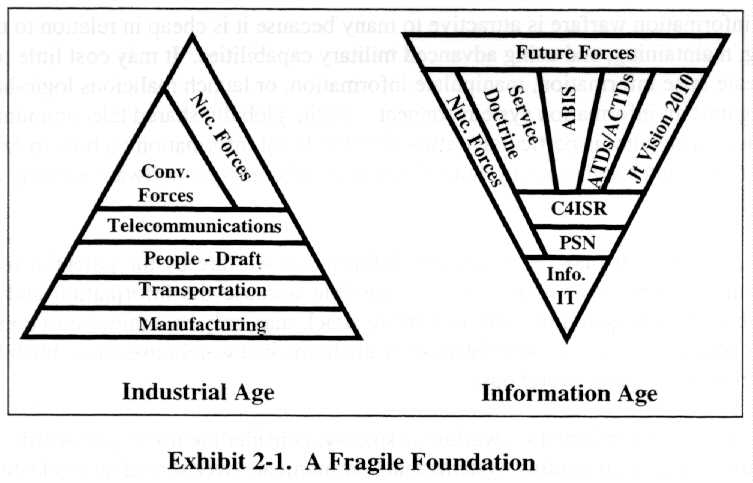

A few words about the environment are important to set the stage for later discussions. DoD's information infrastructure is a part of a larger national and global information infrastructure. These interconnected and interdependent systems and networks are the foundation for critical economic, diplomatic, and military functions upon which our national and economic security are dependent. Exhibit 2-2 shows a few examples of those functions, the importance of information and the information infrastructure to each, and the criticality of functions such as coalition building in responding to a regional crisis.

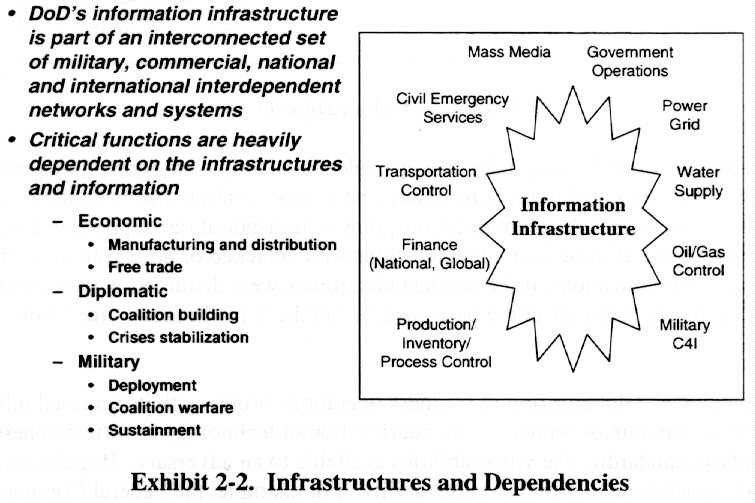

The United States is an information and information systems dominated society. Because of its ever-increasing dependence on information and information technology, the United States is one of the most vulnerable nations to information warfare attacks. The United States and its infrastructures are vulnerable to a variety of threats ranging from rogue hackers for hire to coordinated transnational and state-sponsored efforts to gain some economic, diplomatic or military advantage. Exhibit 2-3 depicts some of the vulnerabilities.

The military implications of this dependency were made abundantly clear when it was suggested in one of the briefings presented to the Task Force that points of failure had been identified for each of three infrastructures (telecommunications, power, transportation) supporting a key port city in the United States. If these individual locations were attacked or destroyed, or in the case of power and telecommunications, if the resident electronics were disturbed, it would impact the ability of military forces to deploy at the pace specified in the Time Phased Force Deployment List.
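The notion of a point of failure can be made concrete with a small graph sketch: a location is a single point of failure if removing it alone cuts every route between two places. The network below is entirely hypothetical (the node names and links are invented for illustration and do not represent any real port), and the brute-force removal test is an assumed, simple method rather than the analysis briefed to the Task Force.

```python
from collections import deque

# Hypothetical transportation links between a depot and a port.
LINKS = {
    "depot": ["junction_a", "junction_b"],
    "junction_a": ["depot", "bridge"],
    "junction_b": ["depot", "bridge"],
    "bridge": ["junction_a", "junction_b", "port_gate"],
    "port_gate": ["bridge", "port"],
    "port": ["port_gate"],
}


def reachable(links, start, goal, removed=None):
    """Breadth-first search from start to goal, skipping the removed node."""
    if start == removed or goal == removed:
        return False
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in links.get(node, []):
            if nxt != removed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def single_points_of_failure(links, start, goal):
    """Intermediate nodes whose loss alone disconnects start from goal."""
    return sorted(
        node
        for node in links
        if node not in (start, goal)
        and not reachable(links, start, goal, removed=node)
    )


critical = single_points_of_failure(LINKS, "depot", "port")
# critical == ["bridge", "port_gate"]: the two junctions back each other up,
# but every route to the port crosses the bridge and the port gate.
```

The same test applies to telecommunications or power networks by substituting switching centers or substations for the nodes. Real infrastructures would also require capacity and timing data, which this sketch ignores.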

And it is getting worse. Globalization of business operations brings with it increased information and information system interdependence. Standardization of technology for effectiveness and economies tends to standardize the vulnerabilities available to an adversary. Regulation and deregulation also contribute to growing vulnerability. For example, the Federal Communications Commission has mandated an evolution toward an open network architecture concept, which has as its goal equal, user-transparent access via public networks to network services provided by network-based and non-network enhanced service providers. However, in execution, the concept makes network control software increasingly accessible to the users -- and the adversaries. Implementation of the Telecommunications Act of 1996 will also require the carriers to collocate key network control assets and to increase the number of points of interconnection among the carriers. The Act also mandates third-party access to operations support systems, providing even more possible points of access to the critical infrastructure control functions. Similarly, the Federal Energy Regulatory Commission's recent Orders 888 and 889 directed the deregulation of the electric power industry. As part of Order 889, the electric utilities are required to establish an Open Access Same-time Information System (OASIS) using the Internet as the backbone.

Exhibit 2-4 illustrates the variety of network and computer system vulnerabilities which can be exploited, starting with simply making too much information available to too many people. The number of holes is mind-boggling -- an indication of the complexity and depth of the defensive information warfare task!

| Vulnerability | Exploitation technique |
|---|---|
| Human factors | Information freely available |
| Authentication-based | Password sniffing/cracking |
| Data driven | Directing E-mail to a program; Embedded programming languages; Remotely accessed software |
| Software-based | Viruses |
| Protocol-based | Weak authentication |
| Denials of service | Network flooding |
| Cryptosystem weakness | Inadequate key size/characteristics |
| Key Management | Deducing key |

Exhibit 2-4. Vulnerabilities/Exploitation Techniques

Take, for example, "Remotely accessed software," which is found under "Data Driven." Distributed software objects, such as Java and ActiveX, are the wave of the future. Rather than having software reside permanently in workstations or desktop computers, the Internet will make applications and data available as needed. The applications and data are deleted from the workstations or desktop computers after use. The danger of this just-in-time support is that the user has no idea as to what might be hidden in the code. Another aspect of distributed computing is that the definition of system boundaries becomes very blurred. This suggests considerable future difficulty in defining what can and cannot be monitored for self-protection, an implication discussed in Section 6.11, Resolve the Legal Issues, with legal recommendations.

The implication is that a risk management process is needed to deal with the inability to close all of the holes. Since this subject has been treated extensively by other study efforts (e.g., the Joint Security Commission) the Task Force elected not to examine risk management.

2.4 THREAT

There is ample evidence from the Defense Information Systems Agency and the General Accounting Office of the presence of intruders in DoD unclassified systems and networks. Briefings and reports to the Task Force have reinforced the DISA experience. Exhibit 2-5 shows some of the threats involved.

- Services and DISA experience
- CIA, DIA, and NSA briefings
- NCS and NSTAC
- Growing interest in sharing sensitive information

Exhibit 2-5. The Threat is Real

The "1996 CSI/FBI Computer Crime and Security Survey," released to the public earlier this year, concluded that "there is a serious problem" and cited a growing number of attacks ranging from "data diddling" to scanning, brute-force password attacks, and denial of service. The National Communications System and the President's National Security Telecommunications Advisory Committee have been warning since 1989 that the public switched network is growing more vulnerable and is experiencing a growing number of penetrations. There is also a growing interest in sharing sensitive vulnerability information among private sector companies, among government agencies, and between government and the private sector. However, sometimes the technology success we have achieved and our faith in our technological superiority blinds us to the growing threat and to our own vulnerabilities. Exhibit 2-6 depicts the Task Force view of the threat.

| Threat | Validated* | Existence | Likely | Beyond |
|---|---|---|---|---|
| Incompetent | W | - | - | - |
| Hacker | W | - | - | - |
| Disgruntled Employee | W | - | - | - |
| Crook | W | - | - | - |
| Organized Crime | L | - | W | - |
| Political Dissident | - | W | - | - |
| Terrorist Group | - | L | W | - |
| Foreign Espionage | L | - | W | - |
| Tactical Countermeasures | - | W | - | - |
| Orchestrated Tactical IW | - | - | L | W |
| Major Strategic Disruption of U.S. | - | - | - | L |

* Validated by DIA. W = Widespread; L = Limited

Exhibit 2-6. Threat Assessment

The incompetent threat is an amateur who by some means (perhaps by following a hacker recipe or by accident) manages to perform some action that exploits or exacerbates a vulnerability. This category could include a poorly trained systems administrator who assigns privilege groups incorrectly, which would then allow a more nefarious threat to claim more privileges on a system than would be warranted.

The hacker threat implies a person with more technical knowledge who to some degree understands the processes used and has the intent to violate the security or defenses of a target to one degree or another. The hacker threat is broad in motivation, ranging from those who are mostly just curious to those who commit acts of vandalism.

The disgruntled employee threat is the ultimate insider threat: the individual who is inside the organization and trusted. This threat is the most difficult to detect because insiders have legitimate access.

When examining the potential for information warfare activities, the potential for a criminal or nongovernmental attack for economic purposes must be considered. Information is the basis for the global economy. Money is information; only approximately 10 percent of the time does it exist in physical form. As information systems are increasingly used for financial transactions at all levels, it is natural to expect all levels of criminals to target information systems in order to achieve some gain.

The increasing interconnectivity of information systems makes them a tempting target for political dissidents. Activities of interest to this group include spreading the basic message of their cause by a variety of means as well as inviting others to take action. An example is the political dissident in this country who sent out e-mails urging folks to send e-mail bombs to the White House server.

By attacking those targets in a highly visible way, the terrorist hopes to cause the media to provide a great deal of publicity of the action, thereby further disseminating the message of fear and uncertainty.

A significant threat that cannot be discounted includes activities engaged on behalf of competitor states. The purpose behind such attacks could be an attempt to influence U.S. policy by isolated attacks; foreign espionage agents seeking to exploit information for economic, political, or military intelligence purposes; the application of tactical countermeasures intended to disrupt a specific U.S. military weapon or command system; or an attempt to render a major catastrophic blow to the United States by crippling the National Information Infrastructure.

It is necessary to distinguish between what a layman might consider a "major disruption," such as the three New York airports simultaneously being inoperable for hours, and a "strategic" impact, in which both the scope and the duration of the disruption are dramatically broader. The latter is likely to occur at a time when other contemporaneous events make the impact potentially "strategic," such as during a major force deployment.

The Task Force struggled with the issue of what would truly constitute a "strategic attack" or "strategic" impact upon the United States. The old paradigms of "n" nuclear weapons, or threats to "overthrow the United States per se," were only marginally helpful in understanding the degree to which we are vulnerable today to Information Warfare attack in all of its dimensions. Compounding this issue is the difficulty of assessing the real impact of cascading effects through our infrastructures: whether they amount to major nuisances and inconveniences to our way of life or whether they literally threaten the existence of the United States itself or its ability to mount its defenses.

The Task Force concluded that, in this new world, an event or series of events would be considered strategic either because the impact was so broad and pervasive, or because the events occurred at times and places which affected (or could affect) our ability to conduct our necessary affairs. One example we used to illustrate this latter point was a disruption in the area phone, power, and transportation systems coincident with our attempts to embark and move major military forces through that area to points abroad.

Few members of the Task Force felt that the power failures in several contiguous Southwestern states this summer were a "major disruption" or of "strategic impact" on the United States. Clearly they were inconveniences. However, had we reason to believe that the outages had been knowingly orchestrated by adversaries of the United States, this nation would have been outraged.

An issue related to our perceived vulnerabilities is the ability of an adversary to actually plan and execute Information Warfare so that it creates the desired impact. Our Task Force had many enlightening discussions about the potential for effects to cascade through one infrastructure (such as the phone system) into other infrastructures. This example is particularly important because most of our other infrastructures ride on the phone system. No one seems to know quite how, where, or when effects actually would cascade, nor what the total impact might be. The Threat and Vulnerabilities Panel concluded that if, with all the knowledge we have about our own systems, we are unable to determine the degree to which effects would multiply and cascade, an adversary would have a far more difficult task of collecting and assessing detailed intelligence on literally hundreds, if not thousands, of networked systems in order to plan and successfully execute an attack of the magnitude which we would consider to be "strategic." The very complexity and heterogeneity of today's systems provide a measure of protection against catastrophic failure, because the systems are not all susceptible to the same precise attacks. Presumably, the more kinds of attacks required, the harder it would be to induce cascading effects that would paralyze large segments of this nation. This is not to say that significant mischief is unlikely. It does suggest that the risk of an adversary planning and predicting the intended results at the times and places needed to truly disrupt the United States is considered low for approximately the next decade.

The trade and news media regularly report on the penetration of businesses and financial institutions by organized crime to steal funds, the theft of telecommunications services, the theft of money via electronic funds transfer, and the theft of intellectual property to include foreign government-sponsored theft and transfer to offshore competitors of intellectual property from U.S. manufacturing firms.

The media also report instances of disgruntled employees, contract employees, and ex-employees of firms using their access and knowledge to destroy data, to steal information, to conduct industrial espionage, to invade privacy-related records for self-interest and for profit, and to conduct fraud. (An MCI employee electronically stole 60,000 credit card numbers from an MCI telephone switch and sold them to an international crime ring. MCI estimated the loss at $50 million.) Malicious activity by "insiders" is one of the most difficult challenges to information assurance.

DISA reported that it responded to 255 computer security incidents in 1994 and to 559 incidents in 1995. Of these, 210 were intrusions into computers, 31 were virus incidents, and 39 fell into another category. This is probably just the tip of a very large iceberg. Last year, DISA personnel used "hacker-type" tools to attack 26,170 unclassified DoD computers. They found that 3.6 percent of the unclassified computers tested were "easily" exploited using a "front door" attack because the most basic protection was missing and that 86 percent of the unclassified computers tested could be penetrated by exploiting the trusted relationships between machines on shared networks. Worse, 98 percent of the penetrations were not detected by the administrators or users of these computers. In the 2 percent of the cases where the intrusion was detected, it was reported only 5 percent of the time. This works out to roughly one in a thousand intrusions being both detected and reported. These detection and reporting statistics suggest that up to 200,000 intrusions might have been made into DoD's unclassified computers during calendar year 1995.
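The arithmetic behind these detection and reporting figures can be checked directly. The sketch below is illustrative only. It assumes that the 210 reported intrusions are the base for the extrapolation, which the text does not state explicitly, and the function names are hypothetical.

```python
from fractions import Fraction

# Figures quoted in the text above.
DETECTED = Fraction(2, 100)               # 98 percent of penetrations went undetected
REPORTED_IF_DETECTED = Fraction(5, 100)   # detected intrusions were reported 5 percent of the time
REPORTED_INTRUSIONS = 210                 # ASSUMPTION: used here as the extrapolation base


def detected_and_reported_rate(detected, reported_if_detected):
    """Fraction of all intrusions that are both detected and reported."""
    return detected * reported_if_detected


def implied_total_intrusions(reported_count, rate):
    """Total intrusions implied by a reported count and a combined detect-and-report rate."""
    return reported_count / rate


if __name__ == "__main__":
    rate = detected_and_reported_rate(DETECTED, REPORTED_IF_DETECTED)
    total = implied_total_intrusions(REPORTED_INTRUSIONS, rate)
    print(f"detected and reported: {rate} of intrusions")
    print(f"implied total intrusions: {int(total):,}")
```

Under these assumptions the combined rate is 2 percent of 5 percent, or one intrusion in a thousand, and 210 reported intrusions imply about 210,000 actual intrusions, which is the same order as the 200,000 figure cited above.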

Whatever the number, unknown intruders have been routinely breaking into unclassified DoD computers, using passwords and user identities stolen from the Internet, since late 1993. Once the intruders enter the computers masquerading as the legitimate users, they install "back doors" so that they can always get back into the computer. These intruders have gained access to computers used for research and development in a variety of fields, and for inventory and property accounting, payroll and business support, supply, maintenance, e-mail files, procurement, health systems, and even the master clock for one-fourth of the world. They have modified, stolen, and destroyed data and software and have shut down computers and networks.

Such intrusions are not limited to DoD. Information age "electronic terrorists" have penetrated commercial computers and data-flooded or "pinged" network connections to deny service and destroy data to further their causes. In one case, an environmental group sponsored such attacks to call attention to its message and to punish a business with which it disagreed.

In the early 1980s an intruder required a high level of technical knowledge to successfully penetrate computers. By the early 1990s automated tools for disabling audits, stealing passwords, breaking into computers, and spoofing packets on networks were common. These tools are easy to use and do not require much technical expertise. Most have a friendly graphical user interface (GUI); automated attacks can be initiated with a simple click on a computer mouse.

Such tools include:

RootKit - a medium technology software command language package which, when run on a UNIX computer, will allow complete access and control of the computer's data and network interfaces. If this computer is attached to a privileged network, the network is now under the control of the RootKit tool set user.

SATAN - a medium technology software package designed to test for several hundred vulnerabilities of UNIX-based network systems, especially those which are client/server. However, the tool goes beyond the testing and grants

WatcherT - a high technology Artificial Intelligence engine, which is rumored to have been created by an international intelligence agency. It is designed to look for several thousand vulnerabilities in all kinds of computers and networks including PCs, UNIX (client/server) and mainframes.

More sophisticated attacks include plain text encryption of programs and messages, that is, using plain text to hide malicious code; disabling of audit records; mounting attacks that are encrypted and that come from multiple points to defeat security detection mechanisms; hiding software code in graphic images or within spreadsheets or word processing documents; the insertion, over time and by multiple paths, of multi-part software programs; the physical compromise of nodes, routers, and networks; the spoofing of addresses; eavesdropping (installing "sniffers" on Internet routers) on telecommunications and networks to obtain addresses and passwords for subsequent downstream spoofing; and the modification of packet transmissions on networks.

Hackers with a bent toward cyber crime are actively recruited by both organized crime and unethical businessmen, including private investigators who want to access privacy-protected information. Such recruiting was intense at the hacker convention DEFCON III, held August 4 to 6, 1995, in Las Vegas. Such conventions also serve as a clearing house for hacker tradecraft. At DEFCON III, sessions were held on hacking the latest communications protocols (ATM and Frame Relay); the development and distribution of polymorphic software code (code that dynamically changes and adapts to the computer it is installed on); the penetration of health maintenance organizations and insurance companies; and the vulnerabilities of telephone systems. New services such as electronic commerce, cyber cash, mobile computing, and personal communications services are already areas of intense criminal interest.

The hackers and the cyber criminals are very efficient. The current state of technology favors the attackers, who need only minimal resources to accomplish their objectives. They have accumulated considerable knowledge of various devices and commercial software by examining unprotected sites. This know-how and tradecraft are transportable and are shared on the 400-plus hacker bulletin boards worldwide. These include hacker bulletin boards sponsored by governments (for example, the French intelligence service sponsors such a board). These boards are also used to distribute very sophisticated user-friendly "point-and-click" hacker tools that enable even amateurs to attack computers with a high degree of success.

A CD-ROM entitled The Hacker Chronicles, Vol II, produced by P-80 Systems and available at hacker shows for $49.95, contains hundreds of megabytes of "hacker" and information security information including automated tools for breaking into computers. The package carries this warning notice:

The criminal acts described on this disk are not condoned by the publishers and should not be attempted. The information itself is legal, while the usage of such information may be illegal. The Hacker Chronicles is for information and educational purposes only. All information in this compilation was legally available to the public [readily available on the Internet] prior to this publication.

Attacks are not just based on the use of smart tools. Simple social engineering (impersonation and misrepresentation to obtain information) remains very productive. The ruses are many: a "cyber friend," a free software upgrade that has been doctored to circumvent security, or a "customer" demanding and receiving support over the telephone from a customer-oriented firm.

Additional details on the Task Force assessment of the threat are provided in Appendix A, Threat Assessment.

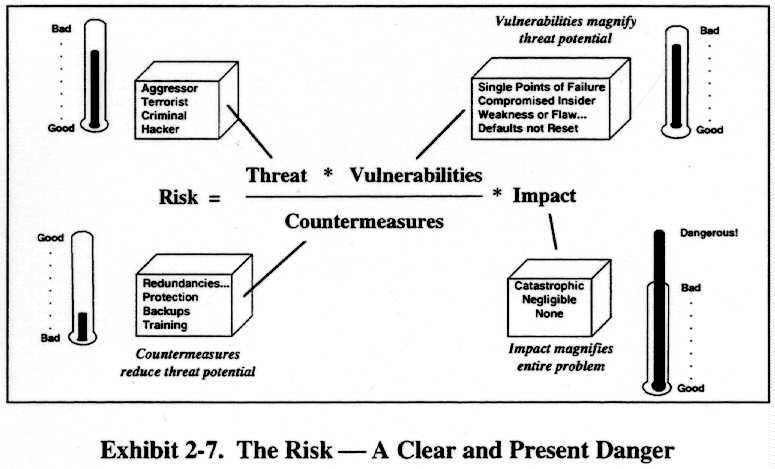

The nature of the danger is evident in an assessment of the current risk, which is based on the presence of a threat; the vulnerabilities of our networks and computing systems; the measures available to counter an attack; and the impact resulting from the loss of critical information, information systems, or information networks. This is depicted in Exhibit 2-7.

The Task Force believes that the overall risk is significant because of the following factors:

- The current threat is significant.

- The vulnerabilities are numerous.

- The countermeasures are extremely limited.

- The impact of loss of portions of the infrastructure could have catastrophic effects on the ability of the Department to fulfill its missions.

[End Section 2.0]